
CNN Explainer - Interpreting Convolutional Neural Networks (1/N)

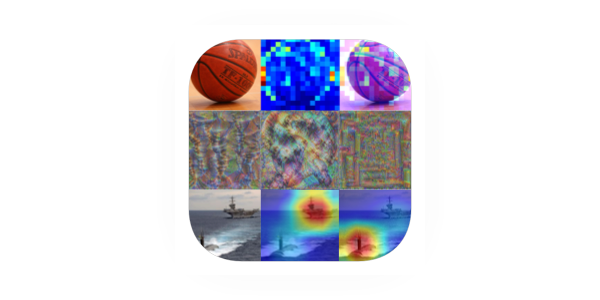

Generating Area Importance Heatmaps with Occlusions

In today’s article, we start a series of articles that aims to demystify the predictions of Convolutional Neural Networks (CNNs). CNNs are very successful at solving many Computer Vision tasks, but since they are neural networks after all, they can fall into the category of ‘black box’ systems that don’t explain their predictions out of the box.

However, in this series we are going to explain their behavior by visualizing learned weights and activation maps and by running occlusion tests. By the end of the series, you will be able to reason not only about what CNNs predict but also about why they do so.
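To make the idea of an occlusion test concrete before we get to real CNNs, here is a minimal NumPy sketch. It assumes the model is wrapped as a function `predict_fn` that maps a batch of images of shape (N, H, W, C) to class scores of shape (N, num_classes); the names `occlusion_heatmap`, `predict_fn`, `patch`, `stride`, and `fill` are illustrative and do not come from any particular library. The idea is to cover one square region at a time with a constant patch, re-run the model, and record how much the target-class score drops. Large drops mark regions the prediction relies on.

```python
import numpy as np


def occlusion_heatmap(image, predict_fn, target_class, patch=8, stride=4, fill=0.0):
    """Slide a constant patch over `image` and record the score drop.

    image:      array of shape (H, W, C)
    predict_fn: hypothetical wrapper around a model, mapping (N, H, W, C)
                to scores of shape (N, num_classes)
    """
    h, w = image.shape[:2]
    # Score of the unmodified image, used as the reference point.
    baseline = predict_fn(image[np.newaxis])[0, target_class]
    rows = (h - patch) // stride + 1
    cols = (w - patch) // stride + 1
    heatmap = np.zeros((rows, cols), dtype=np.float64)
    for i in range(rows):
        for j in range(cols):
            occluded = image.copy()
            y, x = i * stride, j * stride
            # Hide one square region of the image.
            occluded[y:y + patch, x:x + patch, :] = fill
            score = predict_fn(occluded[np.newaxis])[0, target_class]
            # Positive values: hiding this region lowered the target score.
            heatmap[i, j] = baseline - score
    return heatmap


def toy_predict(batch):
    # Toy stand-in for a CNN: the class-0 score is the mean intensity of
    # the top-left 16x16 corner; the class-1 score is a constant 0.5.
    corner = batch[:, :16, :16, :].mean(axis=(1, 2, 3))
    return np.stack([corner, np.full_like(corner, 0.5)], axis=1)


img = np.ones((32, 32, 3))
hm = occlusion_heatmap(img, toy_predict, target_class=0, patch=8, stride=8)
print(hm.shape)  # (4, 4)
```

Because the toy model only looks at the top-left 16×16 corner, only the four patches covering that corner lower the class-0 score (by 0.25 each), so `hm[:2, :2]` is 0.25 and every other entry is 0. With a real CNN, a stride smaller than the patch size gives overlapping windows and a smoother heatmap, at the cost of more forward passes.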

Why Does Interpretability Matter?

In the Machine Learning and Computer Vision communities, there is an urban legend that in the 1980s the US military wanted to use artificial neural networks to automatically detect camouflaged tanks.

To do so, they took a bunch of pictures of trees and bushes with tanks hidden behind them, and some pictures of the same trees and bushes without the tanks. They believed that with this approach, the system would eventually be able to detect whether an image contained a hidden tank or not.

The system seemed to work fine, and the results were really impressive. However, they wanted to be sure that the system could generalize, so they took another set of pictures with and without tanks. This time the system performed badly and could not tell whether an image contained a hidden tank.

It turned out that all the pictures without tanks had been taken on a cloudy day, while the ones with tanks had been taken on a sunny day. As a result, the network learned to recognize the weather instead of hidden tanks.

It’s unclear whether the above situation actually happened (for more info see this link), but it’s very easy to imagine such situations occurring in real-world scenarios and going unnoticed.